How to properly allocate CPU and memory resources

The following recommendations cover the platform's proposed load balancer specifications for small, medium, large, and custom production environments. They also cover the two ways of sharing a load balancer: instance allocation and port isolation. Refer to them when planning a deployment.


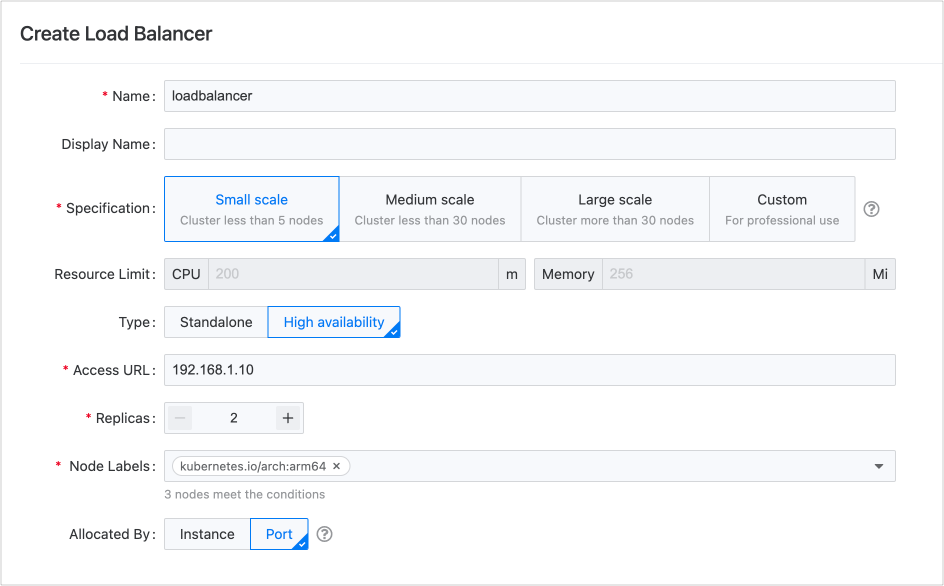

Small Production Environment

For smaller workloads, such as a cluster with no more than 5 nodes that runs only standard applications, a single load balancer is sufficient. Deploy it in high availability mode with at least 2 replicas to keep the environment stable.

Use port isolation to let multiple projects share this load balancer.
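One way to picture port isolation is that each project sharing the load balancer is given its own range of listener ports, and those ranges must not overlap. The sketch below is a hypothetical illustration of that idea only, not the platform's API or configuration format: the `PortRange` type, the `find_conflicts` and `project_for_port` helpers, the project names, and the example port numbers are all assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass
from itertools import combinations
from typing import Optional


@dataclass(frozen=True)
class PortRange:
    """Hypothetical inclusive range of listener ports assigned to one project."""
    project: str
    start: int
    end: int

    def __post_init__(self) -> None:
        # Reject empty or out-of-range assignments (valid TCP/UDP ports are 1-65535).
        if not (1 <= self.start <= self.end <= 65535):
            raise ValueError(f"invalid port range for {self.project}: {self.start}-{self.end}")

    def overlaps(self, other: PortRange) -> bool:
        # Two inclusive ranges overlap unless one ends before the other starts.
        return self.start <= other.end and other.start <= self.end


def find_conflicts(ranges: list[PortRange]) -> list[tuple[str, str]]:
    """Return pairs of projects whose ports on the shared load balancer overlap."""
    return [(a.project, b.project) for a, b in combinations(ranges, 2) if a.overlaps(b)]


def project_for_port(ranges: list[PortRange], port: int) -> Optional[str]:
    """Return the project that owns the given port, or None if it is unassigned."""
    for r in ranges:
        if r.start <= port <= r.end:
            return r.project
    return None


if __name__ == "__main__":
    # Hypothetical assignment: two projects sharing one load balancer.
    assignments = [
        PortRange("project-a", 30000, 30099),
        PortRange("project-b", 30100, 30199),
    ]
    print(find_conflicts(assignments))
    print(project_for_port(assignments, 30150))
```

Checking for overlaps before handing out a new range is what keeps one project's listeners from colliding with another's on the same load balancer.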

The peak QPS measured in a lab environment for this specification is approximately 300 requests per second.

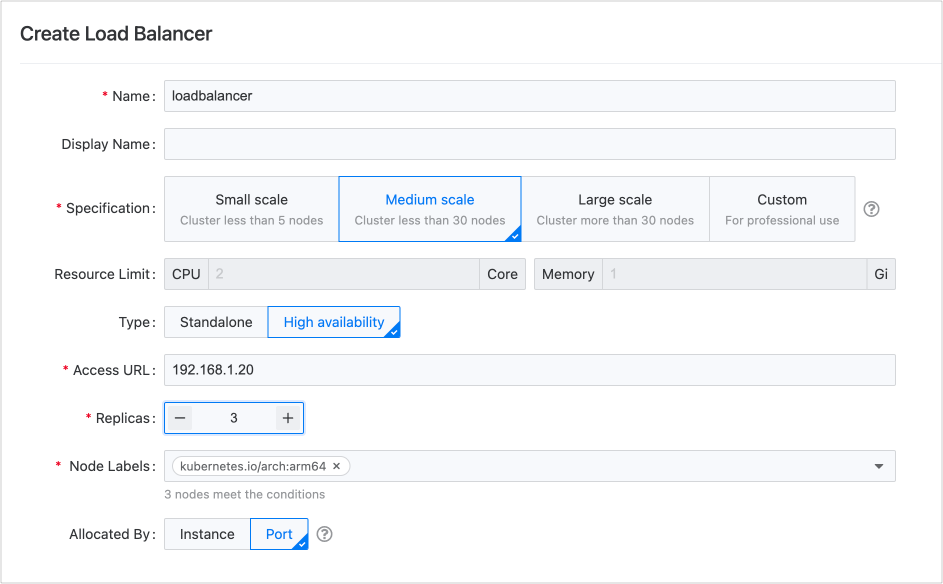

Medium Production Environment

As the business grows, for example to a cluster with no more than 30 nodes that must handle high-concurrency traffic in addition to standard applications, a single load balancer is still adequate. Deploy it in high availability mode with at least 3 replicas to keep the environment stable.

Multiple projects can share the load balancer through either port isolation or instance allocation. You can also create additional load balancers dedicated to core projects.

The peak QPS measured in a lab environment for this specification is around 10,000 requests per second.

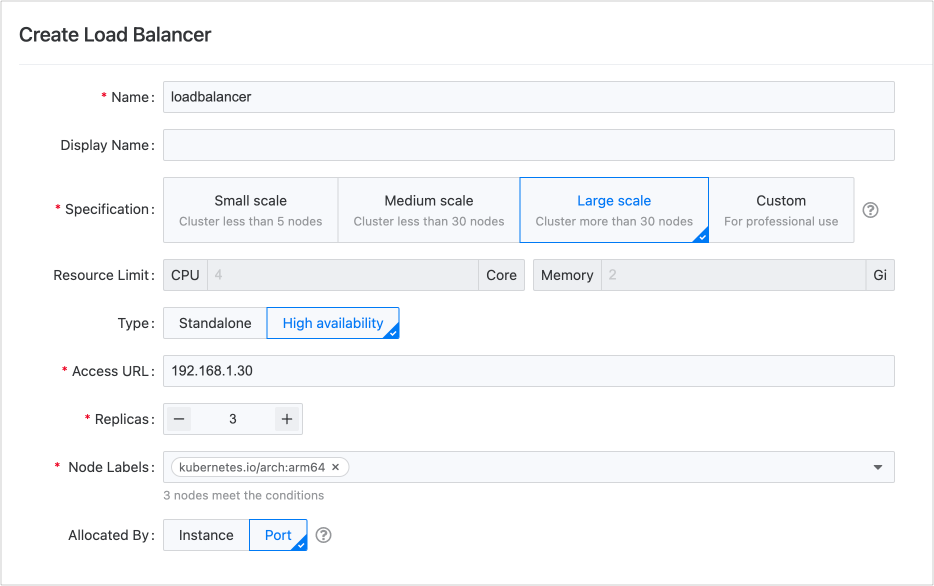

Large Production Environment

For larger workloads, such as a cluster with more than 30 nodes that must handle high-concurrency traffic as well as long-lived data connections, use multiple load balancers. Deploy each one in high availability mode with at least 3 replicas to keep the environment stable.

Multiple projects can share each load balancer through either port isolation or instance allocation. You can also create additional load balancers for the exclusive use of core projects.

The peak QPS measured in a lab environment for this specification is approximately 20,000 requests per second.
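The sizing guidance for the three environments can be condensed into a small lookup. The following is a minimal sketch that uses only the figures stated above (node-count thresholds, minimum replicas, sharing methods, and lab-measured peak QPS). The `Recommendation` type and the `recommend` function are hypothetical, custom environments are not covered, and the rule that a heavier workload type moves the cluster up a tier even when its node count is small is an assumption, not documented platform behavior.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Recommendation:
    """Load balancer sizing taken from the small/medium/large guidance."""
    tier: str
    load_balancers: str        # how many load balancers to run
    min_replicas: int          # minimum replicas per load balancer in HA mode
    sharing: tuple[str, ...]   # ways multiple projects can share a load balancer
    lab_peak_qps: int          # approximate peak QPS measured in a lab, not a guarantee


SMALL = Recommendation("small", "one", 2, ("port isolation",), 300)
MEDIUM = Recommendation("medium", "one, plus optional dedicated ones for core projects", 3,
                        ("port isolation", "instance allocation"), 10_000)
LARGE = Recommendation("large", "multiple, plus optional dedicated ones for core projects", 3,
                       ("port isolation", "instance allocation"), 20_000)


def recommend(node_count: int, high_concurrency: bool = False,
              long_lived_connections: bool = False) -> Recommendation:
    """Pick a tier from cluster size and workload type.

    Assumption: a workload heavier than a tier describes (high concurrency,
    long-lived connections) selects the larger tier regardless of node count.
    """
    if node_count < 1:
        raise ValueError("node_count must be positive")
    if node_count > 30 or long_lived_connections:
        return LARGE
    if node_count > 5 or high_concurrency:
        return MEDIUM
    return SMALL
```

For example, `recommend(12, high_concurrency=True)` selects the medium tier: one load balancer with at least 3 replicas, shareable through port isolation or instance allocation.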