Deployments

Understanding Deployments

Refer to the official Kubernetes documentation: Deployments

A Deployment is a higher-level Kubernetes workload resource used to declaratively manage and update Pod replicas for your applications. It lets you define how your application should run, including how many replicas to maintain and how to perform rolling updates safely.

A Deployment is an object in the Kubernetes API that manages Pods and ReplicaSets. When you create a Deployment, Kubernetes automatically creates a ReplicaSet, which is then responsible for maintaining the specified number of Pod replicas.

Deployments provide the following capabilities:

- Declarative Management: Define the desired state of your application, and Kubernetes automatically ensures the cluster's actual state matches the desired state.

- Version Control and Rollback: Track each revision of a Deployment and easily roll back to a previous stable version if issues arise.

- Zero-Downtime Updates: Gradually update your application using a rolling update strategy without service interruption.

- Self-Healing: Deployments automatically replace Pod instances if they crash, are terminated, or are removed from a node, ensuring the specified number of Pods are always available.

How it works:

- You define the desired state of your application through a Deployment (e.g., which image to use, how many replicas to run).

- The Deployment creates a ReplicaSet to ensure the specified number of Pods are running.

- The ReplicaSet creates and manages the actual Pod instances.

- When you update a Deployment (e.g., change the image version), the Deployment creates a new ReplicaSet and gradually replaces the old Pods with new ones according to the configured rolling update strategy until all new Pods are running. The old ReplicaSet is scaled down to 0 replicas and kept as revision history so that you can roll back to it.

Creating Deployments

Creating a Deployment by using CLI

Prerequisites

- Ensure you have kubectl configured and connected to your cluster.

YAML file example
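A minimal sketch of such a manifest, written to a file from the shell. The Deployment name nginx-deployment, the app: nginx label, the filename, and the container port are assumptions for illustration; the nginx:1.14.2 image and the three replicas match the update walkthrough later in this document.

```shell
# Write an example Deployment manifest to a file (the filename is an assumption).
cat > nginx-deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment        # assumed name
  labels:
    app: nginx                  # assumed label
spec:
  replicas: 3                   # desired number of Pod replicas
  selector:
    matchLabels:
      app: nginx                # must match the Pod template labels below
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.14.2
          ports:
            - containerPort: 80 # assumed port
EOF
```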

Creating a Deployment via YAML
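One way to create the Deployment from a manifest file is sketched below. The helper name create_deployment is hypothetical; it wraps two kubectl apply calls, first a client-side dry run to catch manifest errors, then the real apply.

```shell
# Hypothetical helper: validate a manifest client-side, then apply it.
# $1 is the path to the manifest file.
create_deployment() {
  kubectl apply --dry-run=client -f "$1" &&
    kubectl apply -f "$1"
}
```

For example, create_deployment nginx-deployment.yaml, where the filename is an assumed path to your manifest.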

Creating a Deployment by using web console

Prerequisites

Obtain the image address. Images can come from the image repository that the platform administrator integrated through the toolchain, or from a third-party platform's image repository.

- For the former, the Administrator typically assigns the image repository to your project, and you can use the images within it. If the required image repository is not found, contact the Administrator for allocation.

- For a third-party platform's image repository, ensure that the current cluster can pull images directly from it.

Procedure - Configure Basic Info

- In Container Platform, navigate to Workloads > Deployments in the left sidebar.

- Click Create Deployment.

- Select or input an image, and click Confirm.

  Note: When using images from the image repository integrated into the web console, you can filter images by Already Integrated. The Integration Project Name takes a form such as images (docker-registry-projectname), which includes the project name projectname in this web console and the project name containers in the image repository.

- In the Basic Info section, configure declarative parameters for Deployment workloads.

Procedure - Configure Pod

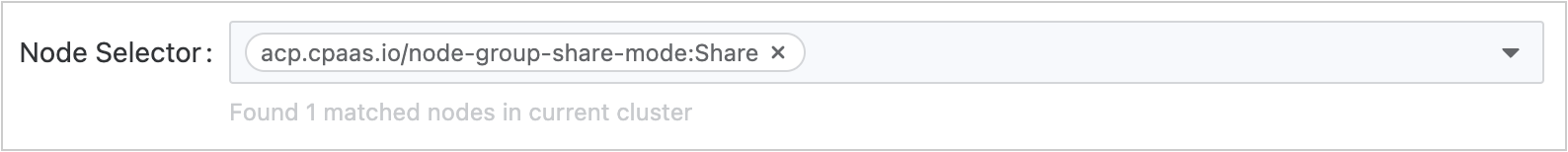

Note: When deploying single-architecture images in a mixed-architecture cluster, ensure proper Node Affinity Rules are configured for Pod scheduling.

- In the Pod section, configure container runtime parameters and lifecycle management:

  - Node Affinity Rules

  - Network Configuration

    - Kube-OVN

    - Calico

Procedure - Configure Containers

- In the Container section, refer to the following instructions to configure the relevant information.

- Click Add Container (upper right) or Add Init Container.

  See Init Containers. Init containers:

  - Start before app containers (sequential execution).

  - Release resources after completion.

  - Deletion allowed when:

    - Pod has >1 app container AND ≥1 init container.

    - Not allowed for single-app-container pods.

- Click Create.

Reference Information

Storage Volume Mounting instructions

Health Checks

Managing Deployments

Managing a Deployment by using CLI

Viewing a Deployment

- Check that the Deployment was created.

- Get details of your Deployment.
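The two steps above can be sketched with kubectl get and kubectl describe. The helper name view_deployment is hypothetical, and the Deployment name is passed as an argument.

```shell
# Hypothetical helper: list Deployments, then show details for one of them.
# $1 is the Deployment name.
view_deployment() {
  # Confirm the Deployment exists and see its ready/up-to-date/available counts.
  kubectl get deployments
  # Show replicas, update strategy, conditions, and recent events.
  kubectl describe deployment "$1"
}
```

For example, view_deployment nginx-deployment, assuming a Deployment with that name.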

Updating a Deployment

Follow the steps given below to update your Deployment:

- Update the nginx Pods to use the nginx:1.16.1 image, either with kubectl set image or by editing the Deployment with kubectl edit and changing .spec.template.spec.containers[0].image from nginx:1.14.2 to nginx:1.16.1.

- To see the rollout status, run kubectl rollout status for the Deployment.

- Run kubectl get rs to see that the Deployment updated the Pods by creating a new ReplicaSet and scaling it up to 3 replicas, as well as scaling down the old ReplicaSet to 0 replicas.

- Running kubectl get pods should now show only the new Pods.
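The update steps above can be sketched as one sequence. The helper name update_image is hypothetical; the Deployment name and container name are assumptions that you pass as arguments.

```shell
# Hypothetical helper: change a container image and follow the rollout.
# usage: update_image <deployment> <container> <image>
update_image() {
  # Set the new image on the named container of the Pod template.
  kubectl set image "deployment/$1" "$2=$3" &&
    # Block until the rollout completes or its progress deadline is exceeded.
    kubectl rollout status "deployment/$1" &&
    # Expect a new ReplicaSet at full size and the old one at 0 replicas.
    kubectl get rs &&
    # Expect only Pods from the new ReplicaSet.
    kubectl get pods
}
```

For example, update_image nginx-deployment nginx nginx:1.16.1, assuming the Deployment is named nginx-deployment and its container is named nginx.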


Scaling a Deployment

You can scale a Deployment with kubectl scale, setting the desired number of replicas with the --replicas flag.
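A minimal sketch of scaling with kubectl scale. The helper name scale_deployment is hypothetical; the Deployment name and replica count are arguments.

```shell
# Hypothetical helper: set the replica count of a Deployment.
# usage: scale_deployment <deployment> <replicas>
scale_deployment() {
  kubectl scale "deployment/$1" --replicas="$2"
}
```

For example, scale_deployment nginx-deployment 5, assuming a Deployment with that name.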

Rolling Back a Deployment

- Suppose that you made a typo while updating the Deployment, by putting the image name as nginx:1.161 instead of nginx:1.16.1.

- The rollout gets stuck. You can verify this by checking the rollout status with kubectl rollout status.
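A stuck rollout like this one can be reverted with kubectl rollout undo. The sketch below is one possible sequence, not the only one; the helper name rollback_deployment is hypothetical and the Deployment name is an argument.

```shell
# Hypothetical helper: inspect revisions, then roll back to the previous one.
# $1 is the Deployment name.
rollback_deployment() {
  # List the recorded revisions of the Deployment.
  kubectl rollout history "deployment/$1"
  # Return to the previous revision, then wait for the rollback to finish.
  kubectl rollout undo "deployment/$1" &&
    kubectl rollout status "deployment/$1"
}
```

For example, rollback_deployment nginx-deployment, assuming a Deployment with that name. To return to a specific revision rather than the previous one, kubectl rollout undo also accepts --to-revision.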

Deleting a Deployment

Deleting a Deployment also deletes the ReplicaSets it manages and all associated Pods.
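A minimal sketch of deletion with kubectl delete. The helper name delete_deployment is hypothetical; the Deployment name is an argument.

```shell
# Hypothetical helper: delete a Deployment by name.
# Its ReplicaSets and Pods are removed along with it.
delete_deployment() {
  kubectl delete deployment "$1"
}
```

For example, delete_deployment nginx-deployment, assuming a Deployment with that name.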

Managing a Deployment by using web console

Viewing a Deployment

View a Deployment to get information about your application.

- In Container Platform, navigate to Workloads > Deployments.

- Locate the Deployment you wish to view.

- Click the deployment name to see the Details, Topology, Logs, Events, Monitoring, etc.

Updating a Deployment

- In Container Platform, navigate to Workloads > Deployments.

- Locate the Deployment you wish to update.

- In the Actions drop-down menu, select Update to view the Edit Deployment page.

Deleting a Deployment

- In Container Platform, navigate to Workloads > Deployments.

- Locate the Deployment you wish to delete.

- In the Actions drop-down menu, select Delete, then confirm.

Troubleshooting by using CLI

When a Deployment encounters issues, here are some common troubleshooting methods.

Check Deployment status
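One way to inspect a Deployment's status is sketched below. The helper name check_deployment is hypothetical; the JSONPath expression prints each status condition (such as Available and Progressing) with its status and reason.

```shell
# Hypothetical helper: print the status conditions of a Deployment.
# $1 is the Deployment name.
check_deployment() {
  kubectl get deployment "$1" \
    -o jsonpath='{range .status.conditions[*]}{.type}={.status} ({.reason}){"\n"}{end}'
  # Full details, including recent events.
  kubectl describe deployment "$1"
}
```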

Check ReplicaSet status
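A minimal sketch for inspecting the ReplicaSets behind a Deployment, selected by label. The helper name check_replicasets is hypothetical, and the label selector is an assumption that depends on your Pod template labels.

```shell
# Hypothetical helper: list and describe ReplicaSets matching a label selector.
# $1 is a label selector such as app=nginx.
check_replicasets() {
  # Compare desired, current, and ready counts for each ReplicaSet.
  kubectl get rs -l "$1"
  # Events here often explain why Pods could not be created.
  kubectl describe rs -l "$1"
}
```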

Check Pod status
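A minimal sketch for inspecting the Pods of a Deployment, selected by label. The helper name check_pods is hypothetical, and the label selector is an assumption that depends on your Pod template labels.

```shell
# Hypothetical helper: list and describe Pods matching a label selector.
# $1 is a label selector such as app=nginx.
check_pods() {
  # Show phase, restart count, and the node each Pod runs on.
  kubectl get pods -l "$1" -o wide
  # Events show scheduling, image pull, and probe failures.
  kubectl describe pods -l "$1"
}
```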

View Logs
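A minimal sketch for reading container logs with kubectl logs. The helper name view_logs is hypothetical, and the tail length of 100 lines is an arbitrary choice.

```shell
# Hypothetical helper: show recent logs for a Pod.
# $1 is the Pod name.
view_logs() {
  # Logs from the currently running container.
  kubectl logs "$1" --tail=100
  # Logs from the previous container instance; this call fails
  # if the container has never restarted.
  kubectl logs "$1" --previous --tail=100
}
```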

Enter Pod for debugging
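A minimal sketch for opening a shell inside a running Pod with kubectl exec. The helper name debug_pod is hypothetical, and it assumes the container image ships /bin/sh.

```shell
# Hypothetical helper: open an interactive shell in a Pod.
# $1 is the Pod name; assumes /bin/sh exists in the container image.
debug_pod() {
  kubectl exec -it "$1" -- /bin/sh
}
```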

Check Health configuration

Ensure livenessProbe and readinessProbe are correctly configured, and your application's health check endpoints are responding properly. See Troubleshooting probe failures.

Check Resource Limits

Ensure container resource requests and limits are reasonable and that containers are not being killed due to insufficient resources.
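One way to check this from the CLI is sketched below. The helper name check_resources is hypothetical; the JSONPath expressions print each container's configured resources and the reason its previous instance terminated, where a value of OOMKilled indicates the memory limit was exceeded.

```shell
# Hypothetical helper: show resource settings and last termination reasons.
# $1 is the Pod name.
check_resources() {
  # Configured requests and limits for each container.
  kubectl get pod "$1" \
    -o jsonpath='{range .spec.containers[*]}{.name}: {.resources}{"\n"}{end}'
  # Reason the previous container instance terminated (empty if it never did).
  kubectl get pod "$1" \
    -o jsonpath='{range .status.containerStatuses[*]}{.name}: {.lastState.terminated.reason}{"\n"}{end}'
}
```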